The Design Principle of PyTorch Architecture

This article introduces the principles that drive the implementation of PyTorch and shows how they are reflected in its architecture.

Design principles

PyTorch’s success stems from weaving previous ideas into a design that balances speed and ease of use. Four main principles guide its design choices:

Be Pythonic: Data scientists are familiar with the Python language, its programming model, and its tools.

Put researchers first: PyTorch strives to make writing models, data loaders, and optimizers as easy and productive as possible.

Provide pragmatic performance: To be useful, PyTorch needs to deliver compelling performance, although not at the expense of simplicity and ease of use.

Worse is better: Given a fixed amount of engineering resources, and all else being equal, the time saved by keeping the internal implementation of PyTorch simple can be used to implement additional features, adapt to new situations, and keep up with the fast pace of progress in the field of AI. Therefore, it is better to have a simple but slightly incomplete solution than a comprehensive but complex and hard-to-maintain design.

Usability Centric Design

Deep learning models are just Python programs

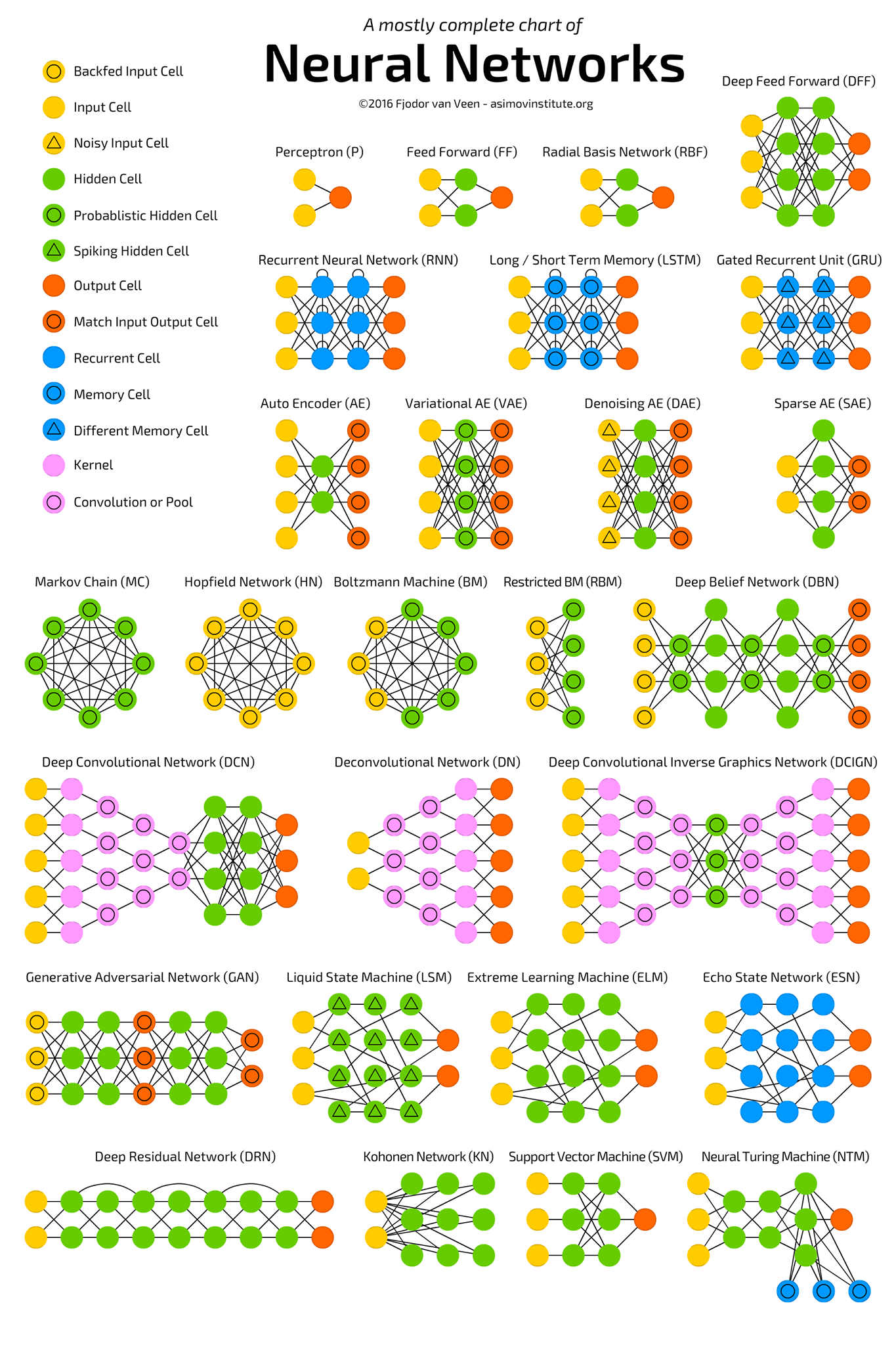

Neural networks themselves evolved rapidly from simple sequences of feed-forward layers into incredibly varied numerical programs, often composed of many loops and recursive functions. To support this growing complexity, PyTorch forgoes the potential benefits of a graph-metaprogramming-based approach in order to preserve the imperative programming model of Python.

PyTorch extends this to all aspects of deep learning workflows. Defining layers, composing models, loading data, running optimizers, and parallelizing the training process are all expressed using the familiar concepts developed for general purpose programming. This solution ensures that any new potential neural network architecture can be easily implemented with PyTorch.
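To make the "models are just Python programs" idea concrete, here is a minimal sketch. The class name `RepeatedBlock` and its `steps` argument are hypothetical, chosen only for illustration; the point is that the forward pass uses an ordinary Python loop and branch rather than a separate graph-building language.

```python
import torch
from torch import nn


class RepeatedBlock(nn.Module):
    """Hypothetical model: applies one linear layer a caller-chosen number of times."""

    def __init__(self, width: int):
        super().__init__()
        self.layer = nn.Linear(width, width)

    def forward(self, x: torch.Tensor, steps: int) -> torch.Tensor:
        # Ordinary Python loop: the number of layer applications is decided at run time.
        for _ in range(steps):
            x = torch.relu(self.layer(x))
            # Ordinary Python branch on a value computed from the tensor.
            if x.abs().sum() == 0:
                break
        return x


torch.manual_seed(0)
model = RepeatedBlock(width=4)
out = model(torch.randn(2, 4), steps=3)
print(out.shape)
```

Because the loop and the early exit are plain Python, they can be stepped through with a regular debugger or changed between calls without rebuilding anything.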

Interoperability and extensibility

Easy and efficient interoperability is one of the top priorities for PyTorch because it opens the possibility to leverage the rich ecosystem of Python libraries as part of user programs. Hence, PyTorch allows for bidirectional exchange of data with external libraries.

- For example, it provides a mechanism to convert between NumPy arrays and PyTorch tensors using the `torch.from_numpy()` function and the `.numpy()` tensor method.
- Similar functionality is also available to exchange data stored using the DLPack format.
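The exchange described above can be sketched as follows. This assumes NumPy is installed and uses CPU tensors; the DLPack round trip goes through `torch.utils.dlpack`, and the variable names are illustrative only.

```python
import numpy as np
import torch
from torch.utils import dlpack

arr = np.arange(6, dtype=np.float32).reshape(2, 3)

# NumPy -> PyTorch: the tensor shares memory with the array, so nothing is copied.
t = torch.from_numpy(arr)
t[0, 0] = 100.0
print(arr[0, 0])  # the write made through the tensor is visible in the array

# PyTorch -> NumPy: for a CPU tensor this is again a view on the same buffer.
back = t.numpy()

# DLPack round trip: export the tensor as a DLPack capsule, then import it again.
capsule = dlpack.to_dlpack(t)
t2 = dlpack.from_dlpack(capsule)
print(torch.equal(t, t2))
```

Since the conversions share memory rather than copy, an in-place change on one side shows up on the other; call `.clone()` or `.copy()` first when independent data is needed.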

Automatic differentiation

In its current implementation, PyTorch performs reverse-mode automatic differentiation, which computes the gradient of a scalar output with respect to a multivariate input. Differentiating functions with more outputs than inputs is more efficiently executed using forward-mode automatic differentiation, but this use case is less common for machine learning applications.
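A minimal sketch of reverse-mode differentiation for a scalar output of a multivariate input. The function y = sum(x^2) is chosen only because its gradient, 2x, is easy to check by hand.

```python
import torch

# Multivariate input; requires_grad asks autograd to record operations on it.
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Scalar output y = x1^2 + x2^2 + x3^2.
y = (x ** 2).sum()

# One reverse pass computes the gradient of y with respect to every element of x.
y.backward()

print(x.grad)  # dy/dx = 2x
```

A single backward pass yields all three partial derivatives at once, which is why reverse mode suits the common case of a scalar loss with many parameters.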

Common Code Snippets

Configuration

`# PyTorch version`
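A minimal sketch of a typical configuration check, assuming only that `torch` is installed. It is an illustration of commonly used calls, not the author's original snippet; the `device` variable is a conventional name, not something PyTorch requires.

```python
import torch

# Installed PyTorch version string.
print(torch.__version__)

# CUDA version PyTorch was built against; None on CPU-only builds.
print(torch.version.cuda)

# Whether a usable CUDA device is present at run time.
print(torch.cuda.is_available())

# Pick a device once and reuse it when creating tensors and models.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(device)
```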

Tensor

`# Tensor Information`
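A minimal sketch of inspecting a tensor's basic properties, assuming a small CPU tensor created just for the example. It is an illustration of standard tensor attributes, not the author's original snippet.

```python
import torch

t = torch.zeros(2, 3, dtype=torch.float32)

print(t.dtype)    # element type
print(t.shape)    # size along each dimension (same as t.size())
print(t.dim())    # number of dimensions
print(t.numel())  # total number of elements
print(t.device)   # where the data lives
```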



http://vincentgaohj.github.io/Blog/2021/01/17/The-Design-Principle-of-Pytorch-Architecture/