AWS Solutions Architect (Associate) - Topic 7: HA Architecture

High availability protects against data center, Availability Zone, server, network, and storage subsystem failures to keep your business running without downtime.

HA Architecture

Load Balancers

Elastic Load Balancing automatically distributes incoming application traffic across multiple targets, such as Amazon EC2 instances, containers, IP addresses, Lambda functions, and virtual appliances. It can handle the varying load of your application traffic in a single Availability Zone or across multiple Availability Zones.

Load Balancer Types

- Application Load Balancer

- Application Load Balancer is best suited for load balancing of HTTP and HTTPS traffic and provides advanced request routing targeted at the delivery of modern application architectures, including microservices and containers.

- Application Load Balancer routes traffic to targets within Amazon VPC based on the content of the request.

- Network Load Balancer

- Network Load Balancer is best suited for load balancing of Transmission Control Protocol (TCP), User Datagram Protocol (UDP), and Transport Layer Security (TLS) traffic where extreme performance is required.

- Network Load Balancer routes traffic to targets within Amazon VPC and is capable of handling millions of requests per second while maintaining ultra-low latencies.

- Gateway Load Balancer

- Gateway Load Balancer makes it easy to deploy, scale, and run third-party virtual networking appliances. Providing load balancing and auto scaling for fleets of third-party appliances, Gateway Load Balancer is transparent to the source and destination of traffic.

- This capability makes it well suited for working with third-party appliances for security, network analytics, and other use cases.

- Classic Load Balancer

- Classic Load Balancer provides basic load balancing across multiple Amazon EC2 instances and operates at both the request level and the connection level. Classic Load Balancer is intended for applications that were built within the EC2-Classic network.
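In practice, the choice between these types mostly comes down to the protocol you need to balance. As a rough study aid, the mapping above can be sketched in Python. `suggest_load_balancer` is a hypothetical helper, not an AWS API. Classic Load Balancer is left out because it is intended for legacy EC2-Classic applications.

```python
# Hypothetical study-aid helper: maps a listener protocol to the ELB type
# these notes recommend. It is not part of any AWS SDK.
LAYER_7 = {"HTTP", "HTTPS"}                 # request-level (application) traffic
LAYER_4 = {"TCP", "UDP", "TLS", "TCP_UDP"}  # connection-level (transport) traffic


def suggest_load_balancer(protocol: str, third_party_appliances: bool = False) -> str:
    """Return the load balancer type suited to the given protocol."""
    if third_party_appliances:
        # Gateway Load Balancer fronts fleets of virtual appliances (firewalls, IDS, ...).
        return "Gateway Load Balancer"
    proto = protocol.upper()
    if proto in LAYER_7:
        return "Application Load Balancer"
    if proto in LAYER_4:
        return "Network Load Balancer"
    raise ValueError(f"unsupported protocol: {protocol}")
```

For example, `suggest_load_balancer("https")` returns `"Application Load Balancer"`, and `suggest_load_balancer("tcp")` returns `"Network Load Balancer"`.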

Details to Note

- 504 Error means the gateway has timed out: the application is not responding within the idle timeout period.

- Troubleshoot the application. Is the problem in the Web Server Layer or the Database Server Layer?

- If you need the IPv4 address of your end user, look for the X-Forwarded-For header.

- Instances monitored by the ELB are reported as InService or OutOfService.

- Health checks determine instance health by sending requests to the instance.

- Load balancers (Application Load Balancer and Classic Load Balancer) have their own DNS name. You are never given an IP address.

- You can get a static IP address (one per enabled Availability Zone) for a Network Load Balancer.
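The InService/OutOfService status is driven by consecutive health check results, measured against a healthy threshold and an unhealthy threshold. The sketch below models that behaviour. The threshold values and the assumption that a newly registered instance starts OutOfService are illustrative choices, not AWS defaults.

```python
from dataclasses import dataclass


@dataclass
class HealthCheckTracker:
    """Tracks one instance's state from a stream of health check results."""
    healthy_threshold: int = 3    # consecutive passes needed to become InService
    unhealthy_threshold: int = 2  # consecutive failures needed to become OutOfService
    state: str = "OutOfService"   # assumption: a new instance starts out of service
    _passes: int = 0
    _failures: int = 0

    def record(self, passed: bool) -> str:
        """Feed one health check result and return the resulting state."""
        if passed:
            self._passes += 1
            self._failures = 0
            if self.state == "OutOfService" and self._passes >= self.healthy_threshold:
                self.state = "InService"
        else:
            self._failures += 1
            self._passes = 0
            if self.state == "InService" and self._failures >= self.unhealthy_threshold:
                self.state = "OutOfService"
        return self.state
```

Three passes bring the instance InService. A single failure is tolerated, but a second consecutive failure takes it OutOfService again.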

Advanced Load Balancer Theory

Sticky Sessions

This feature is useful for servers that maintain state information in order to provide a continuous experience to clients. To use sticky sessions, the client must support cookies.

By default, a Classic Load Balancer routes each request independently to the registered EC2 instance with the smallest load.

Sticky sessions allow you to bind a user’s session to a specific EC2 instance. This ensures that all requests from the user during the session are sent to the same instance.

You can enable Sticky Sessions for Application Load Balancers as well, but stickiness is configured (and traffic is bound) at the Target Group level.
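To make the difference concrete, here is a toy Python model of a Classic Load Balancer's default least-load routing, with optional cookie-based stickiness layered on top. The class, the cookie values, and the instance names are hypothetical. Real ELB stickiness uses load-balancer-generated or application cookies with a configurable duration, and this sketch ignores both.

```python
import itertools


class StickyLoadBalancer:
    """Toy model: least-load routing plus optional cookie-based stickiness."""

    def __init__(self, instances, sticky=True):
        self.load = {name: 0 for name in instances}  # requests routed so far, per instance
        self.sticky = sticky
        self.sessions = {}                           # cookie value -> bound instance
        self._ids = itertools.count(1)

    def route(self, cookie=None):
        """Return (instance, cookie) for one request."""
        if self.sticky and cookie in self.sessions:
            # Known session: always send it to the instance it is bound to.
            instance = self.sessions[cookie]
        else:
            # Default behaviour: pick the instance with the smallest load.
            instance = min(self.load, key=self.load.get)
            if self.sticky:
                cookie = f"session-{next(self._ids)}"
                self.sessions[cookie] = instance
        self.load[instance] += 1
        return instance, cookie
```

Without stickiness, consecutive requests alternate between equally loaded instances. With stickiness, every request that carries the same cookie lands on the same instance, even when another instance is less busy.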

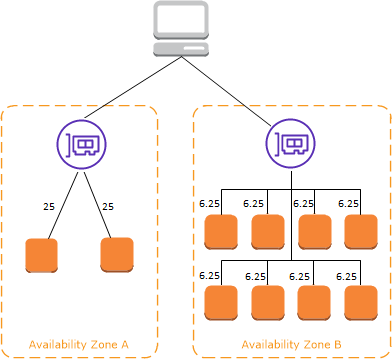

Cross Zone Load Balancing

Cross Zone Load Balancing enables you to load balance across multiple Availability Zones.

With cross-zone load balancing, each load balancer node for your Classic Load Balancer distributes requests evenly across the registered instances in all enabled Availability Zones. If cross-zone load balancing is disabled, each load balancer node distributes requests evenly across the registered instances in its Availability Zone only.

For more information, see Cross-zone load balancing in the Elastic Load Balancing User Guide.
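The effect of the cross-zone setting is easiest to see with arithmetic. A commonly used example has two Availability Zones, one with 2 targets and one with 8. The function below computes the percentage of total traffic each single target receives. It assumes clients spread requests evenly over the load balancer nodes (one node per enabled zone) and that all targets are healthy.

```python
def per_target_share(targets_per_zone: dict, cross_zone: bool) -> dict:
    """Percentage of total traffic each single target receives, keyed by zone."""
    zones = len(targets_per_zone)
    total_targets = sum(targets_per_zone.values())
    shares = {}
    for zone, count in targets_per_zone.items():
        if cross_zone:
            # Every node spreads its traffic over all registered targets.
            shares[zone] = 100 / total_targets
        else:
            # Each node receives 1/zones of the traffic and uses only its own zone's targets.
            shares[zone] = 100 / zones / count
    return shares
```

With cross-zone load balancing enabled, every target gets 10%. With it disabled, the two targets in the smaller zone get 25% each, while the eight targets in the larger zone get only 6.25% each.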

Path Patterns

Path patterns allow you to direct traffic to different EC2 instances based on the URL path contained in the request.

- You can create a listener with rules to forward requests based on the URL path.

- This is known as path-based routing.

- If you are running microservices, you can route traffic to multiple back-end services using path-based routing.

- For example, you can route general requests to one target group and requests to render images to another target group.
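Path-based routing can be modelled as an ordered list of listener rules that are evaluated by priority, where the first match wins and a default action catches everything else. In this sketch, the rule priorities, patterns, and target group names are hypothetical. Python's `fnmatch.fnmatchcase` stands in for ALB's case-sensitive `*` and `?` wildcards. It also accepts `[seq]` classes, which ALB does not.

```python
from fnmatch import fnmatchcase

# Hypothetical listener rules: (priority, path pattern, target group name).
RULES = [
    (10, "/images/*", "image-render-tg"),
    (20, "/api/*", "api-tg"),
]
DEFAULT_TARGET_GROUP = "general-tg"


def pick_target_group(path: str, rules=RULES, default=DEFAULT_TARGET_GROUP) -> str:
    """Evaluate rules in priority order (lowest number first); the first match wins."""
    for _priority, pattern, target_group in sorted(rules):
        # Case-sensitive wildcard match, approximating ALB path patterns.
        if fnmatchcase(path, pattern):
            return target_group
    return default
```

For example, `pick_target_group("/images/cat.png")` returns `"image-render-tg"`, while `"/"` falls through to the default action, `"general-tg"`.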

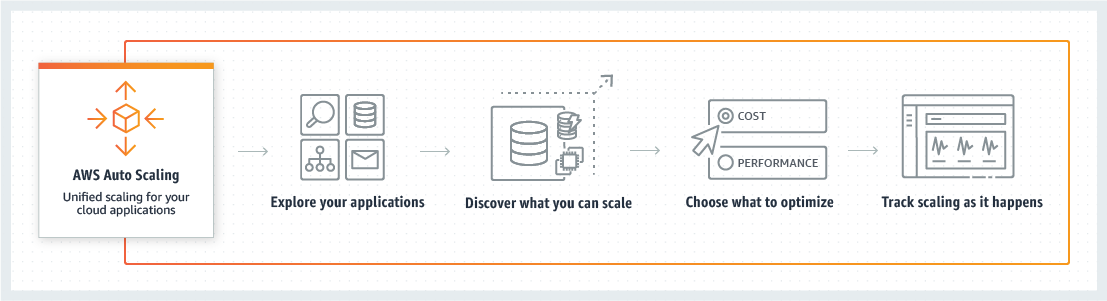

Auto Scaling

AWS Auto Scaling monitors your applications and automatically adjusts capacity to maintain steady, predictable performance at the lowest possible cost.

Three Components

- Groups

- Logical Component

- For example, a web-server group, an application group, or a database group.

- Configuration Templates

- Groups use a launch template or a launch configuration as a configuration template for their EC2 instances.

- You can specify information such as the AMI ID, instance type, key pair, security groups, and block device mapping for your instances.

- Scaling Options

- Scaling Options provide several ways for you to scale your Auto Scaling Groups.

- For example, you can configure a group to scale based on the occurrence of specified conditions (dynamic scaling) or on a schedule.
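The first two components map naturally onto two small data types. This is a hypothetical model for study purposes. The field names mirror the settings listed above (AMI ID, instance type, key pair, security groups), and all values in the test are placeholders. The rule that desired capacity must stay within the group's minimum and maximum is modelled as a `ValueError`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaunchTemplate:
    """Configuration template: what each new instance looks like."""
    ami_id: str
    instance_type: str
    key_pair: str
    security_groups: tuple = ()


@dataclass
class AutoScalingGroup:
    """Logical group of instances launched from one template."""
    name: str
    template: LaunchTemplate
    min_size: int
    max_size: int
    desired_capacity: int

    def __post_init__(self):
        # Validate the initial desired capacity the same way as later changes.
        self.set_desired(self.desired_capacity)

    def set_desired(self, desired: int) -> None:
        """Manual scaling: change only the desired capacity."""
        if not self.min_size <= desired <= self.max_size:
            raise ValueError(f"desired {desired} outside [{self.min_size}, {self.max_size}]")
        self.desired_capacity = desired
```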

Five Options

- Maintain current instance levels at all times

- Maintain a specified number of running instances at all times

- Scale manually

- Specify only the change in the maximum, minimum, or desired capacity of your Auto Scaling Group

- Scale based on a schedule

- Scaling actions are performed automatically as a function of time and date.

- Scale based on demand

- Scaling policies let you define parameters that control the scaling process.

- You can stabilize the CPU utilization of the Auto Scaling Group to stay at around 50 percent when the load on the application changes.

- Use predictive scaling

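- Predictive scaling forecasts load from historical data and adds capacity ahead of predicted demand. You can also use Amazon EC2 Auto Scaling in combination with AWS Auto Scaling to scale resources across multiple services.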
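For scaling based on demand, a target tracking policy such as "keep average CPU at 50 percent" behaves roughly proportionally. The function below is a simplified model of that behaviour, not AWS's exact algorithm, which also accounts for instance warm-up and other details. It scales capacity so that the metric would land on the target if total load stayed the same, then clamps the result to the group's minimum and maximum.

```python
import math


def target_tracking_capacity(current_capacity: int, metric_value: float,
                             target_value: float, min_size: int, max_size: int) -> int:
    """Simplified proportional model of a target tracking scaling policy."""
    # Same total load spread over the new capacity should average out at the target.
    proposed = math.ceil(current_capacity * metric_value / target_value)
    # The group can never go below its minimum or above its maximum.
    return max(min_size, min(max_size, proposed))
```

For example, four instances averaging 75% CPU against a 50% target give `ceil(4 * 75 / 50) = 6` instances. At 20% CPU, the group shrinks to 2.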

HA Architecture

Everything fails. Everything. You should always plan for failure.

- Always Design for failure

- Use multiple AZs and multiple Regions wherever you can.

- Know the difference between Multi-AZ and Read Replicas for RDS.

- Multi-AZ is for disasters

- Read Replicas are for performance

- Know the difference between scaling out and scaling up

- Scaling Out is using Auto Scaling Groups to add additional instances

- Scaling Up is where we increase the resources inside our EC2 instance, for example moving from a t2.micro to a much larger instance type

- Read the question carefully and always consider the cost element

- Know the different S3 storage classes
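The reason to use multiple AZs can be put in numbers. Assume failures in different AZs are independent, which is a simplification because real outages can be correlated. A service that stays up while at least one copy is up then has availability 1 - (1 - a)^n for n copies that each have availability a.

```python
def combined_availability(single: float, copies: int) -> float:
    """Availability of a service that stays up while at least one copy is up.

    Assumes copies (for example, one per AZ) fail independently.
    """
    all_down = (1 - single) ** copies  # probability every copy is down at once
    return 1 - all_down
```

For example, two copies at 99% each give 1 - 0.01 * 0.01 = 0.9999, that is, 99.99%.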

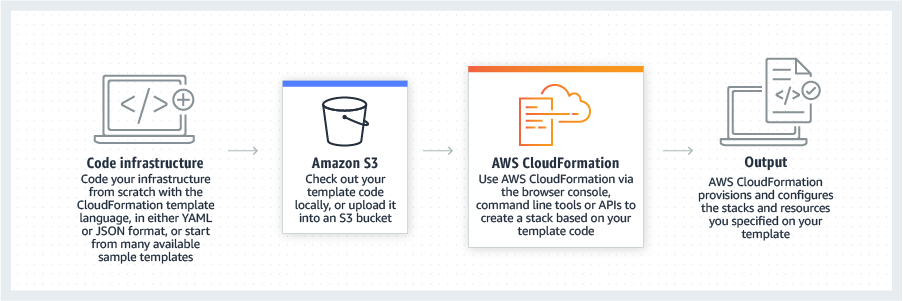

CloudFormation

AWS CloudFormation gives you an easy way to model a collection of related AWS and third-party resources, provision them quickly and consistently, and manage them throughout their lifecycles, by treating infrastructure as code.

- CloudFormation is a way of completely scripting your cloud environment.

- Quick Starts are collections of CloudFormation templates already built by AWS Solutions Architects, allowing you to create complex environments very quickly.
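To make "scripting your cloud environment" concrete, here is a minimal template built as a Python dictionary and serialized to JSON. CloudFormation accepts templates in either JSON or YAML. The logical ID `NotesBucket` and the description are hypothetical. `AWS::S3::Bucket`, `VersioningConfiguration`, and `Ref` are standard template syntax.

```python
import json


def minimal_template(bucket_logical_id: str = "NotesBucket") -> str:
    """Build a tiny CloudFormation template (JSON) declaring one S3 bucket."""
    template = {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Minimal example stack",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                # Optional property, shown to illustrate how resources are configured.
                "Properties": {"VersioningConfiguration": {"Status": "Enabled"}},
            }
        },
        "Outputs": {
            # Ref on a bucket resolves to the bucket's name.
            "BucketName": {"Value": {"Ref": bucket_logical_id}}
        },
    }
    return json.dumps(template, indent=2)
```

Saved to a file, the output could be deployed with `aws cloudformation deploy --template-file template.json --stack-name notes-stack`, where the stack name is a placeholder.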

Build serverless applications with SAM

Build serverless applications faster with the AWS Serverless Application Model (SAM), an open-source framework that provides shorthand syntax to express functions, APIs, databases, and event source mappings. With just a few lines per resource, you can define the application you want and model it using YAML. During deployment, SAM transforms and expands the SAM syntax into CloudFormation syntax.
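SAM's shorthand is easiest to see in a small template, built here as a Python dictionary. The `Transform` line tells CloudFormation to expand SAM resource types. At deployment, the single `AWS::Serverless::Function` resource is expanded into a Lambda function, its execution role, and an API Gateway API, among other resources. The handler name and the `src/` folder are hypothetical.

```python
import json


def sam_template() -> dict:
    """A tiny SAM template: one Lambda function behind an API at GET /hello."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        # Tells CloudFormation to expand SAM shorthand into full CloudFormation.
        "Transform": "AWS::Serverless-2016-10-31",
        "Resources": {
            "HelloFunction": {
                "Type": "AWS::Serverless::Function",
                "Properties": {
                    "Handler": "app.handler",  # hypothetical module.function
                    "Runtime": "python3.12",
                    "CodeUri": "src/",         # hypothetical source folder
                    "Events": {
                        "HelloApi": {
                            "Type": "Api",
                            "Properties": {"Path": "/hello", "Method": "get"},
                        }
                    },
                },
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(sam_template(), indent=2))
```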

Elastic Beanstalk

AWS Elastic Beanstalk is an easy-to-use service for deploying and scaling web applications and services.

With Elastic Beanstalk, you can quickly deploy and manage applications in the AWS Cloud without worrying about the infrastructure that runs those applications. You simply upload your application, and Elastic Beanstalk automatically handles the details of capacity provisioning, load balancing, scaling, and application health monitoring.

- Scaling: AWS Elastic Beanstalk leverages Elastic Load Balancing and Auto Scaling to automatically scale your application in and out based on your application’s specific needs. In addition, multiple availability zones give you an option to improve application reliability and availability by running in more than one zone.

- Customization: With AWS Elastic Beanstalk, you have the freedom to select the AWS resources, such as Amazon EC2 instance type including Spot instances, that are optimal for your application. Additionally, Elastic Beanstalk lets you “open the hood” and retain full control over the AWS resources powering your application. If you decide you want to take over some (or all) of the elements of your infrastructure, you can do so seamlessly by using Elastic Beanstalk’s management capabilities.
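Customizations like these are often expressed as option settings in an `.ebextensions` configuration file, which can be written in JSON or YAML. The sketch below renders one in JSON form. The namespaces and option names (`aws:autoscaling:asg` MinSize/MaxSize and `aws:ec2:instances` InstanceTypes/EnableSpot) come from Elastic Beanstalk's general configuration options and are worth checking against that reference. The values and the function name are hypothetical.

```python
import json


def beanstalk_option_settings(min_size: int, max_size: int, instance_types: list) -> str:
    """Render a hypothetical .ebextensions config (JSON form) for scaling and instances."""
    settings = [
        # Bounds for the environment's Auto Scaling group.
        {"namespace": "aws:autoscaling:asg", "option_name": "MinSize", "value": str(min_size)},
        {"namespace": "aws:autoscaling:asg", "option_name": "MaxSize", "value": str(max_size)},
        # Instance types to launch, as a comma-separated list.
        {"namespace": "aws:ec2:instances", "option_name": "InstanceTypes",
         "value": ",".join(instance_types)},
        # Opt the environment into Spot instances.
        {"namespace": "aws:ec2:instances", "option_name": "EnableSpot", "value": "true"},
    ]
    return json.dumps({"option_settings": settings}, indent=2)
```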

CloudFormation vs Beanstalk

Elastic Beanstalk is aimed at developers who just want to get their application into the AWS Cloud quickly, without having to learn something like CloudFormation, which is a far more powerful tool than Elastic Beanstalk.

Highly Available Bastion

The bastion hosts provide secure access to Linux instances located in the private and public subnets of your virtual private cloud (VPC).

High Availability with Bastion Hosts

- Two hosts in two separate Availability Zones. Use a Network Load Balancer with static IP addresses and health checks to fail over from one host to the other.

- You can’t use an Application Load Balancer, as it operates at layer 7 (HTTP/HTTPS), and SSH traffic needs a layer 4 (TCP) load balancer.

- One host in one Availability Zone behind an Auto Scaling group with health checks and a fixed EIP (Elastic IP). If the host fails, the health check will fail and the Auto Scaling group will provision a new EC2 instance in a separate Availability Zone. You can use a user data script to associate the same EIP with the new host. This is the cheapest option, but it is not 100% fault tolerant.
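In the single-host option, the user data step comes down to one EC2 API call, `AssociateAddress`. Below is a minimal sketch. It assumes a boto3 EC2 client is passed in and that the instance role allows `ec2:AssociateAddress`. The function name and the IDs used in testing are hypothetical.

```python
def reassociate_eip(ec2_client, instance_id: str, allocation_id: str) -> str:
    """Attach a fixed Elastic IP to a freshly launched bastion host.

    `ec2_client` is expected to be a boto3 EC2 client, e.g. boto3.client("ec2").
    AllowReassociation lets the EIP move even if the failed host still holds it.
    """
    response = ec2_client.associate_address(
        InstanceId=instance_id,
        AllocationId=allocation_id,
        AllowReassociation=True,
    )
    return response["AssociationId"]
```

On the new host, this could be called with `boto3.client("ec2")`, the instance's own ID (read from the instance metadata service), and the allocation ID of the fixed EIP. Passing the client in also makes the function easy to exercise with a fake client.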

On-Premises Services with AWS

You need to be aware of which high-level AWS services you can use on-premises for the exam:

- Database Migration Service (DMS)

- Server Migration Service (SMS)

- AWS Application Discovery Service

- VM Import/Export

- Download Amazon Linux 2 as an ISO

Database Migration Service (DMS)

- Allows you to move databases to and from AWS

- For example, you might have your DR environment in AWS and your on-premises environment as your primary.

- Works with most popular database technologies, such as Oracle, MySQL, DynamoDB, etc.

- Supports homogeneous (Oracle -> Oracle) migrations and heterogeneous (SQL Server -> Aurora) migrations.
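The homogeneous/heterogeneous distinction matters because different engines usually need a schema conversion step before DMS moves the data, commonly done with the AWS Schema Conversion Tool (SCT). Below is a hypothetical helper that labels a migration. Treating Aurora MySQL and Aurora PostgreSQL as the same family as MySQL and PostgreSQL is an assumption of this sketch.

```python
# Assumption: engine-compatible Aurora editions count as the same family.
ENGINE_FAMILY = {
    "aurora mysql": "mysql",
    "aurora postgresql": "postgresql",
}


def engine_family(engine: str) -> str:
    """Normalise an engine name, folding compatible engines into one family."""
    name = engine.strip().lower()
    return ENGINE_FAMILY.get(name, name)


def migration_type(source_engine: str, target_engine: str) -> str:
    """Label a DMS migration as homogeneous or heterogeneous."""
    if engine_family(source_engine) == engine_family(target_engine):
        return "homogeneous"
    # Different engines: convert the schema first (commonly with AWS SCT),
    # then let DMS migrate the data.
    return "heterogeneous"
```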

Server Migration Service (SMS)

- Server Migration Service supports incremental replication of your on-premises servers into AWS.

- Can be used as a backup tool, multi-site strategy (on-premises and off-premises), and a DR tool.

AWS Application Discovery Service

- Helps enterprise customers plan migration projects by gathering information about their on-premises data centers.

- You can install the AWS Application Discovery Agent-less Connector as a virtual appliance on VMware vCenter.

- It will then build a server utilization map and dependency map of your on-premises environment.

- The collected data is retained in encrypted format in an AWS Application Discovery Service data store. You can export this data as a CSV file and use it to estimate the Total Cost of Ownership (TCO) of running on AWS and to plan your migration to AWS.

- This data is also available in AWS Migration Hub, where you can migrate the discovered servers and track their progress as they get migrated to AWS.

VM Import/Export

- Migrate existing applications into EC2

- Can be used to create a DR strategy on AWS as a second site.

- You can also use it to export your AWS VMs to your on-premises data center.

Download Amazon Linux 2 as an ISO

- Works with all major virtualization providers, such as VMware, Hyper-V, KVM, VirtualBox (Oracle), etc.


Dive Into Exam

1) You have a website with three distinct services, each hosted by different web server autoscaling groups. Which AWS service should you use?

- Answer: Application Load Balancers (ALB)

- Explanation: The ALB has functionality to distinguish traffic for different targets (mysite.co/accounts vs. mysite.co/sales vs. mysite.co/support) and distribute traffic based on rules for target group, condition, and priority.

2) You have been tasked with creating a resilient website for your company. You create the Classic Load Balancer with a standard health check, a Route 53 alias pointing at the ELB, and a launch configuration based on a reliable Linux AMI. You have also checked all the security groups, NACLs, routes, gateways and NATs. You run the first test and cannot reach your web servers via the ELB or directly. What might be wrong?

- Answer: The launch configuration is not being triggered correctly.

- Explanation: In a question like this you need to evaluate if all the necessary services are in place. The glaring omission is that you have not built an autoscaling group to invoke the launch configuration you specified. The instance count and health check depend on instances being created by the autoscaling group. Finally, key pairs have no relevance to services running on the instance.

3) You work for a major news network in Europe. They have just released a new mobile app that allows users to post their photos of newsworthy events in real time. Your organization expects this app to grow very quickly, essentially doubling its user base each month. The app uses S3 to store the images, and you are expecting sudden and sizable increases in traffic to S3 when a major news event takes place (as users will be uploading large amounts of content). You need to keep your storage costs to a minimum, and you are happy to temporarily lose access to up to 0.1% of uploads per year. With these factors in mind, which storage class should you use to keep costs as low as possible?

- Answer: S3 Standard-IA

- Explanation: The key drivers here are availability and cost, so an awareness of cost is necessary to answer this. S3 Standard is quite expensive at around $0.023 per GB for the lowest band. S3 Standard-IA is $0.0125 per GB, S3 One Zone-IA is $0.01 per GB, and Legacy S3-RRS is around $0.024 per GB for the lowest band. Glacier cannot be considered as it is not intended for direct access, although it comes in at around $0.004 per GB. S3 Standard has an availability of 99.99%, S3 Standard-IA has an availability of 99.9%, while S3 One Zone-IA only has 99.5%. Losing access to up to 0.1% of uploads means you need at least 99.9% availability, so S3 One Zone-IA, although cheaper, does not qualify. Of the offered solutions, S3 Standard-IA is the cheapest suitable option.
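The reasoning in this answer is a filter followed by a minimum: keep only the classes that meet the availability requirement, then pick the cheapest. The sketch below uses only the approximate prices and availability figures quoted in the explanation. RRS and Glacier are omitted because the explanation gives no availability figure for RRS and rules out Glacier for direct access.

```python
# Approximate lowest-band prices (USD per GB-month) and availability, as quoted above.
STORAGE_CLASSES = {
    "S3 Standard":    {"price": 0.023,  "availability": 0.9999},
    "S3 Standard-IA": {"price": 0.0125, "availability": 0.999},
    "S3 One Zone-IA": {"price": 0.01,   "availability": 0.995},
}


def cheapest_meeting(max_unavailability: float, classes=STORAGE_CLASSES) -> str:
    """Cheapest class whose availability is at least 1 - max_unavailability."""
    required = 1 - max_unavailability
    # Small tolerance so floating-point rounding does not exclude an exact match.
    eligible = {name: c for name, c in classes.items()
                if c["availability"] >= required - 1e-12}
    return min(eligible, key=lambda name: eligible[name]["price"])
```

With the question's tolerance of 0.1% (`cheapest_meeting(0.001)`), the result is S3 Standard-IA. Only if 0.5% unavailability were acceptable would S3 One Zone-IA win.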

- Answer: No.

- Explanation: The secondary database should be thought of as a DR site: it becomes active only when the primary fails, as per the documentation. You need read replicas to increase your read performance. https://aws.amazon.com/rds/details/multi-az/

http://vincentgaohj.github.io/Blog/2021/03/02/AWS-Solution-Architect-Associate-7-HA-Architecture/